Welcome to this week's edition of Overclocked!

This week, Albania rolls out a virtual AI “minister” to clean up public procurement, raising real questions about oversight and accountability. Next, the FTC opens a sweeping inquiry into companion chatbots, with special focus on kids’ safety and data. Let’s dive in ⬇️

In today’s newsletter ↓

🏛️ Albanian Government tests a virtual minister

🛡️ Regulators probe companion chatbots

🧠 Microsoft weighs Anthropic as OpenAI backup

🖼️ Seedream 4.0 rivals Nano Banana

🧭 Weekly Challenge: Use AI to understand privacy policies

🧑‍⚖️ Albania Debuts AI Minister

Albania has introduced a virtual AI “minister” named Diella to oversee public procurement, with the aim of reducing corruption in the awarding of tenders.

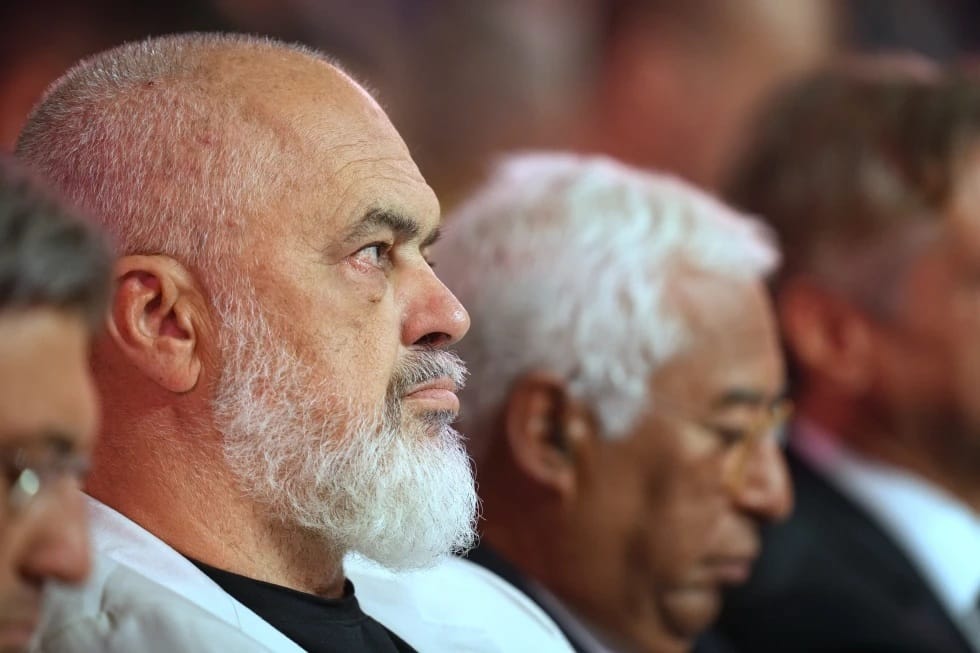

Prime Minister Edi Rama presented Diella as the newest member of his cabinet, elevating a digital assistant that has existed on the state e-Albania portal since January into a formal role tied to contracting decisions. Officials frame the move as a way to make tenders more transparent and less vulnerable to bribery, threats, or conflicts of interest.

Credit: AP News

🔧 How It Works

Diella is described as an AI decision aid that will evaluate bids and, over time, take on responsibility for awarding tenders. The government has not published full technical or governance details. Key open questions include who trains and audits the models, where data comes from, and how conflicts, appeals, or suspected errors will be handled. Reporting notes Diella’s roots as a citizen-facing assistant, now expanded into a procurement function with national impact.

🧭 What Is and Isn’t New

Diella is neither a legislator nor an elected official. It is an executive-branch tool pointed at procurement, one of the most corruption-prone areas of government. The “first” being claimed here is a cabinet-level assignment for an AI agent. Legal experts and opposition figures are already asking how the role will be formalized and how much human oversight will remain, especially around accountability for errors or favoritism.

📈 Why Now

Albania has pledged progress toward EU accession by 2030, which puts intense pressure on procurement integrity. An AI review layer promises speed and consistency, and, politically, it signals a tough stance on graft. The risk is automation bias: if vendors or officials treat the system’s outputs as unquestionable, mistakes could be amplified. Watch for concrete safeguards—independent audits, public logs of award rationales, and clear appeal paths.

Bottom line: a bold experiment in “algorithmic governance,” with credibility hinging on transparency and oversight rather than slogans.

🛡️ FTC Probes Companion Chatbots

The Federal Trade Commission has launched a study under Section 6(b) of the FTC Act into seven companies that run consumer “companion” chatbots, seeking detailed reports on safety testing, data practices, monetization, and guardrails, especially for children and teens.

The orders went to Alphabet, Character Technologies, Instagram, Meta, OpenAI, Snap, and xAI. A 3-0 Commission vote authorized the inquiry, which is exploratory but can inform future enforcement.

📜 What the Orders Seek

The FTC is asking how these products measure and mitigate harms; how characters are developed and approved; how disclosures and ads are handled; and how companies limit use by minors and comply with COPPA.

It also wants specifics about input processing, output generation, and whether personal data from intimate chats is shared or reused. The scope covers both product design (parasocial “friend” dynamics) and business models that incentivize stickiness.

⏱️ Next Steps From the FTC

A 6(b) inquiry compels detailed answers and lets the agency map industry patterns. The Commission can publish findings, issue policy guidance, or refer matters for enforcement. Expect attention to how these companies verify ages, disclose paid features, substantiate wellness claims, and honor refund or deletion requests.

🔎 Areas Under the Microscope

The likely pressure points: retention periods for chats and media, third-party sharing for ads or analytics, creation of biometric data, disclosures around paid intimacy features, the presence of dark patterns, and clarity of parental controls. Also watch for transparency reports that show incident counts, response times, and changes made after complaints. This level of transparency is rare in the AI industry, and the inquiry signals that regulators now expect it, even though a 6(b) study does not by itself impose new requirements.

🧰 Practical Moves for Families

Turn on age limits, disable contact import, lock in-app purchases, and review data sharing. Avoid syncing sensitive chats to cloud backups. Ask for data access or deletion using the app’s request tools, and save a copy of the confirmation for your records.

The Weekly Scoop 🍦

🎯 Weekly Challenge: Tame Your Terms

If you’re tired of reading (or not reading) the privacy policy and data-use terms for every app you use, here’s how to distill them into a quick, understandable summary so you know exactly what you’re signing up for.

Challenge: Turn one long privacy policy into a pocket-sized “terms card” you actually understand.

Here’s what to do:

📌 Step 1: Pick a service

Choose any AI app or companion bot you use weekly. Save the link to its privacy policy or data-use page.

📝 Step 2: Ask for a card

Paste the link (or the policy text itself, if your AI can’t open links) into your AI of choice and ask: “Summarize this as a one-screen card: what data is collected, who sees it, how to opt out, how to delete, and one risk I should know.”

🔍 Step 3: Add proof

Tell the AI to pull exact excerpts and section anchors for each claim on the card, so you can tap back to the source.

⚙️ Step 4: Personalize settings

Have the AI list the three settings you should change right now, with step-by-step taps. Do them.

💾 Step 5: Save and repeat

Title it “Terms card AppName Date” and save it in Notes. Make two more this week for your most-used services.

By the weekend you will know what you share, how to dial it down, and how to delete it when you are done.
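If you’d rather script the challenge than retype the prompt each time, here’s a minimal Python sketch of Steps 2, 3, and 5. It only builds the prompt text and the note title; it doesn’t call any AI service. The function names, the example URL, and the app name are placeholders we made up for illustration, and the date format is our own choice.

```python
from datetime import date

# The five items from the Step 2 prompt, kept in one list so every card
# asks the same questions.
CARD_QUESTIONS = [
    "what data is collected",
    "who sees it",
    "how to opt out",
    "how to delete",
    "one risk I should know",
]


def build_terms_card_prompt(policy_url: str) -> str:
    """Combine the Step 2 summary request with the Step 3 request for proof."""
    questions = ", ".join(CARD_QUESTIONS[:-1]) + ", and " + CARD_QUESTIONS[-1]
    return (
        f"Summarize this as a one-screen card: {questions}.\n"
        "For each claim on the card, pull the exact excerpt and section anchor "
        "from the policy so I can check it against the source.\n"
        f"Policy: {policy_url}"
    )


def card_title(app_name: str, on: date) -> str:
    """Step 5 naming scheme: 'Terms card AppName Date' (ISO date assumed)."""
    return f"Terms card {app_name} {on.isoformat()}"


if __name__ == "__main__":
    # Placeholder app name and URL; swap in the service you picked in Step 1.
    print(card_title("ExampleApp", date.today()))
    print(build_terms_card_prompt("https://example.com/privacy"))
```

Paste the printed prompt into your AI of choice, then save the answer under the printed title.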

That’s it for this week! Are AI politicians a step in the right direction, and will the FTC help curb the proliferation of AI chatbots inappropriately interacting with children? Hit reply and let us know your thoughts.

Zoe from Overclocked